The Media Arts and Technology

End of Year Show 2025

by Devon Frost

Handwoven cotton + alpaca wool

[Elings | SBCAST]

by Devon Frost

Handwoven tencel

[Elings | SBCAST]

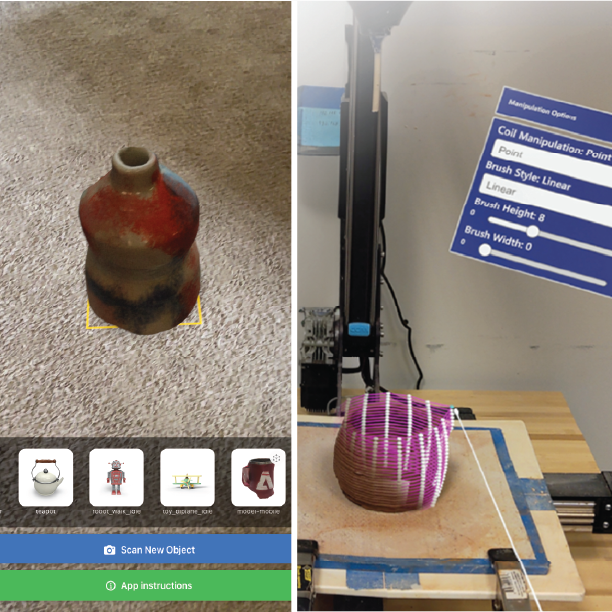

by Ana Maria Cardenas and Joyce Passananti

These are two experiments on the potential of AR to support creative expression in manual crafts and social interactions. One experiment brings traditional ceramic craft techniques into digital fabrication workflows. The other explores creating AR 3D and 2D content in shared environments with multiple users.

[Elings]

by Ashley Del Valle and Emilie Yu

texTile explores a future of clothing shaped by care, creativity, and reusability. Combining traditional crochet with computational design and 3D printing, this work reimagines the modular potential of granny square garments—a longstanding handcraft practice often overlooked in conversations about sustainable fashion. Crochet motifs, or “tiles,” are joined not by thread but with flexible, digitally fabricated connectors made from TPU filament. These connectors make it easy to assemble, disassemble, and reconfigure garments without damaging the handmade motifs. Visitors can explore a range of possible garment layouts generated by a custom software tool, which adapts patterns to different bodies and creative goals. Rather than discard or finalize, this project proposes that clothing can evolve—carrying both memory and possibility. texTile invites reflection on how computational tools might extend not only the lifetime of textiles, but the joy and agency of those who make them.

by Ashley Del Valle and Dana Jones (designer of the Quilt pattern)

Fabric Peacing is a multimedia quilt installation that combines traditional textile craft with animated projection to reflect on memory, loss, and the urgent need for peace. The quilt, designed by Dana Jones, features six paper cranes inspired by the story of Sadako Sasaki, a young girl who developed leukemia after the atomic bombing of Hiroshima. Following a Japanese legend, Sadako folded paper cranes in hopes of healing—believing that folding 1,000 could grant a wish. Her story, shared in Sadako and the Thousand Paper Cranes, has become a lasting symbol of peace and resilience. Over the quilt, projections trace the cranes’ outlines in motion—suggesting energy, flight, and transformation. These fleeting lights bring the stillness of the fabric to life, echoing the artist’s own longing to create peace for herself and others. This work invites viewers to consider what peace means, who gets to experience it, and how we can protect it for all.

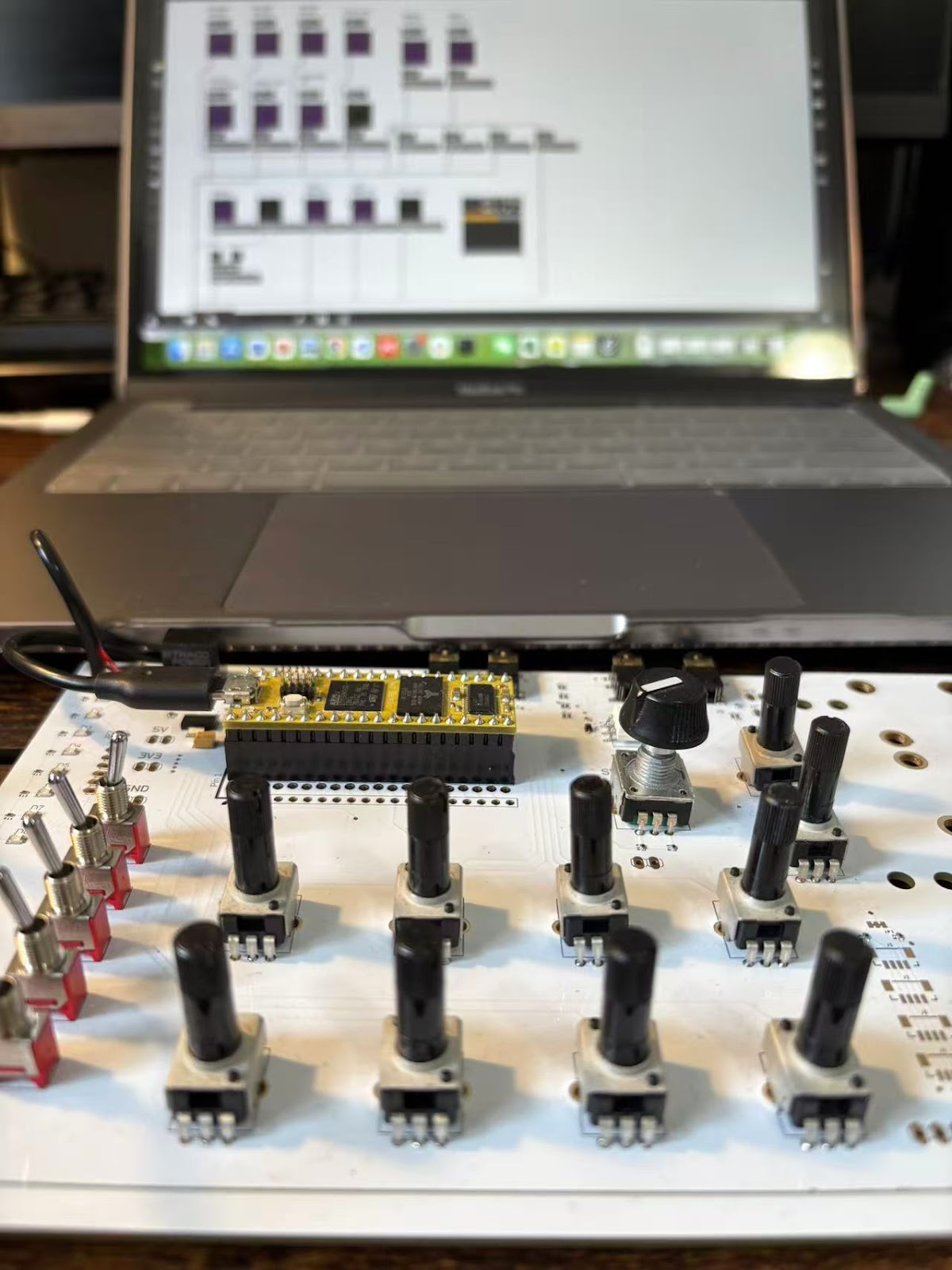

by Yuehao Gao

This project features a controllable DJ interface built on Max/MSP’s “gen” system and an Oopsy board. The performer uses the ten knobs and five switches on the Oopsy board to adjust musical parameters live and to start or pause particular musical features. These include the chord progression, the intensity of the LFO, the density of the main melody notes, a low-pass filter, the flanger effect, and the volume of each channel. The DJ system combines melodies, middle chords, bass notes, and percussion. Because the Oopsy board is known for the range of possibilities it offers for fast, efficient live signal processing, the main goal of this project is to explore the musicality of “hard circuits.” Additionally, inspired by Sabina’s musical project at last year’s EoYS, which got many participants dancing, I also hope this project brings a livelier vibe to this year’s EoYS.

https://gaoyueh8.wixsite.com/home

[SBCAST]

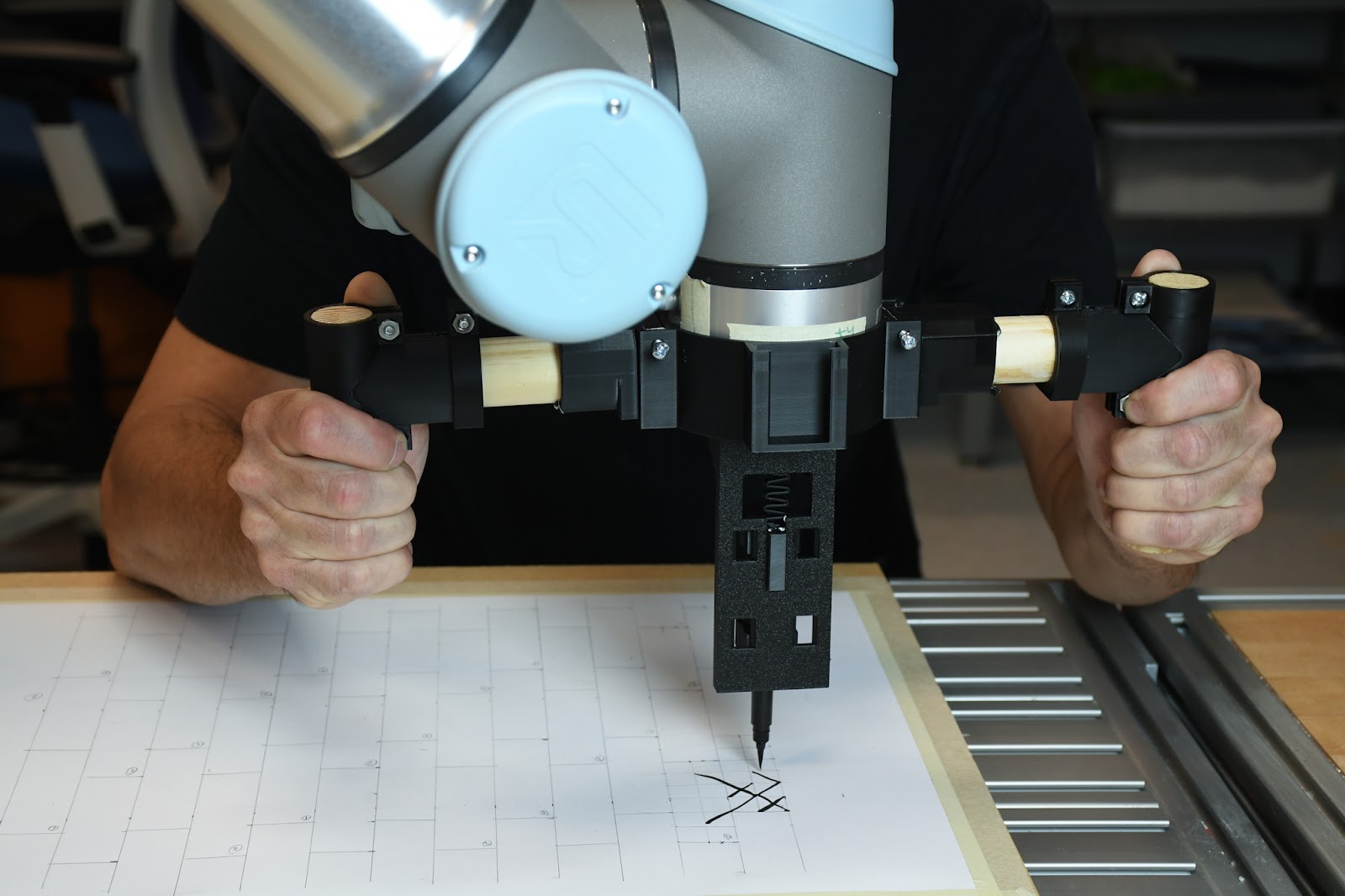

by Sam Bourgault, Alejandro Aponte, Megumi Ondo, Emilie Yu

The WORM is a system that enables artists to program a robotic arm by manually moving the robot through craft actions. These artifacts were made using this manual-computational approach.

[Elings | SBCAST]

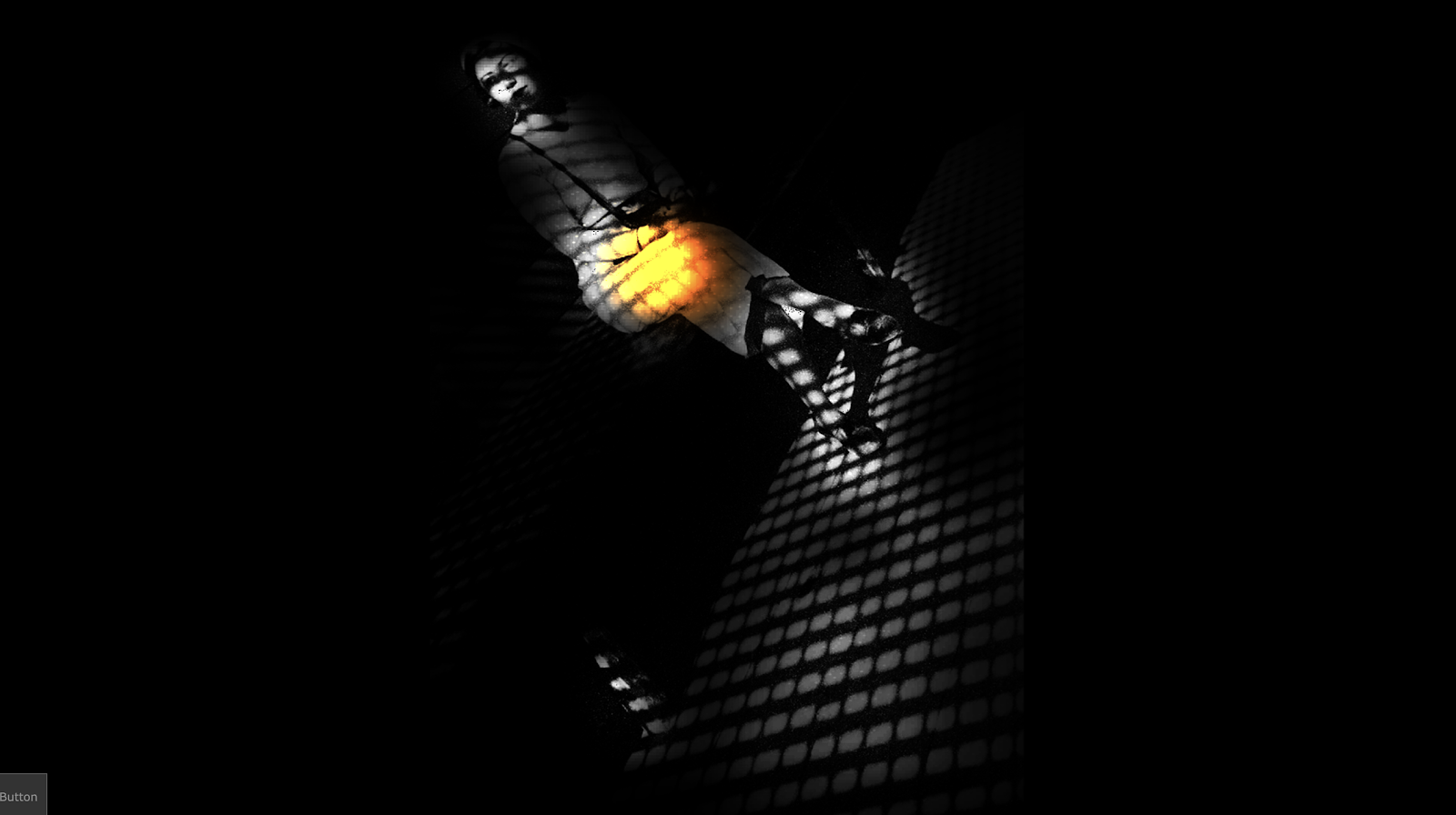

by Lucian Parisi

An audiovisual album of psychedelic, granular chamber music. The experience explores perceptual distortion, light, and physical materials. All work was composed, performed, and coded by Lucian Parisi. This album is being released in the AlloSphere and will be on streaming services after the show.

[Elings]

by Mert Toka

Distributed Agency Trails is a creative system for designing and fabricating unique clay forms by navigating the tension between computational precision and material unpredictability. It explores emergent form-making—how complex shapes arise from simple interactions—through the interplay of digital simulation, human decision-making, and the active behavior of clay. At its core is custom software in which a virtual agent generates a spiral path, balancing between an ideal trajectory and the attraction of its own past track. Real-time tuning of parameters introduces digital perturbations (deviations, buckling, intersections) that become toolpaths for a clay 3D printer. As these paths are printed, clay’s behavior (slumping, swelling, shrinking) amplifies the digital traces. Human intervention during and after printing further shapes the outcome. The final artifact emerges from the entanglement of computational, material, and human agencies. This exhibit presents this system through 3D-printed and kiln-fired ceramics, plotter-drawn toolpaths, and video documentation. Distributed Agency Trails highlights the subtle collaboration between craft and code, showing how meaning emerges through negotiation between intention, instruction, and matter.

[Elings | SBCAST]

by Weihao Qiu and Shaw Yiran Xiao

Attention is the new currency in the age of big data, social media, and short video platforms. Technology companies relentlessly collect and decode our visual preferences and cognitive patterns, designing systems that sustain our engagement in their digital ecosystems—monetizing our watch time through algorithmically-curated ads. With vast streams of attention traces harvested from personal devices, AI models have evolved to the point where they can anticipate our gaze before it even occurs. Attention Divergence is an interactive installation that records the viewer’s eye movements as they observe an image, then juxtaposes these gaze patterns with attention predictions generated by an AI model. The divergence between human and machine attention—or alternatively, the model’s predictive success—is materialized as poetic 3D visualizations, informed by the viewer’s individual gaze. By revealing the mechanics of attention tracking and algorithmic prediction, Attention Divergence invites viewers to reflect on the infrastructures of surveillance embedded in digital life. It offers a contemplative space to question, resist, and reimagine the commodification of human attention in an algorithm-driven world.

[Elings]

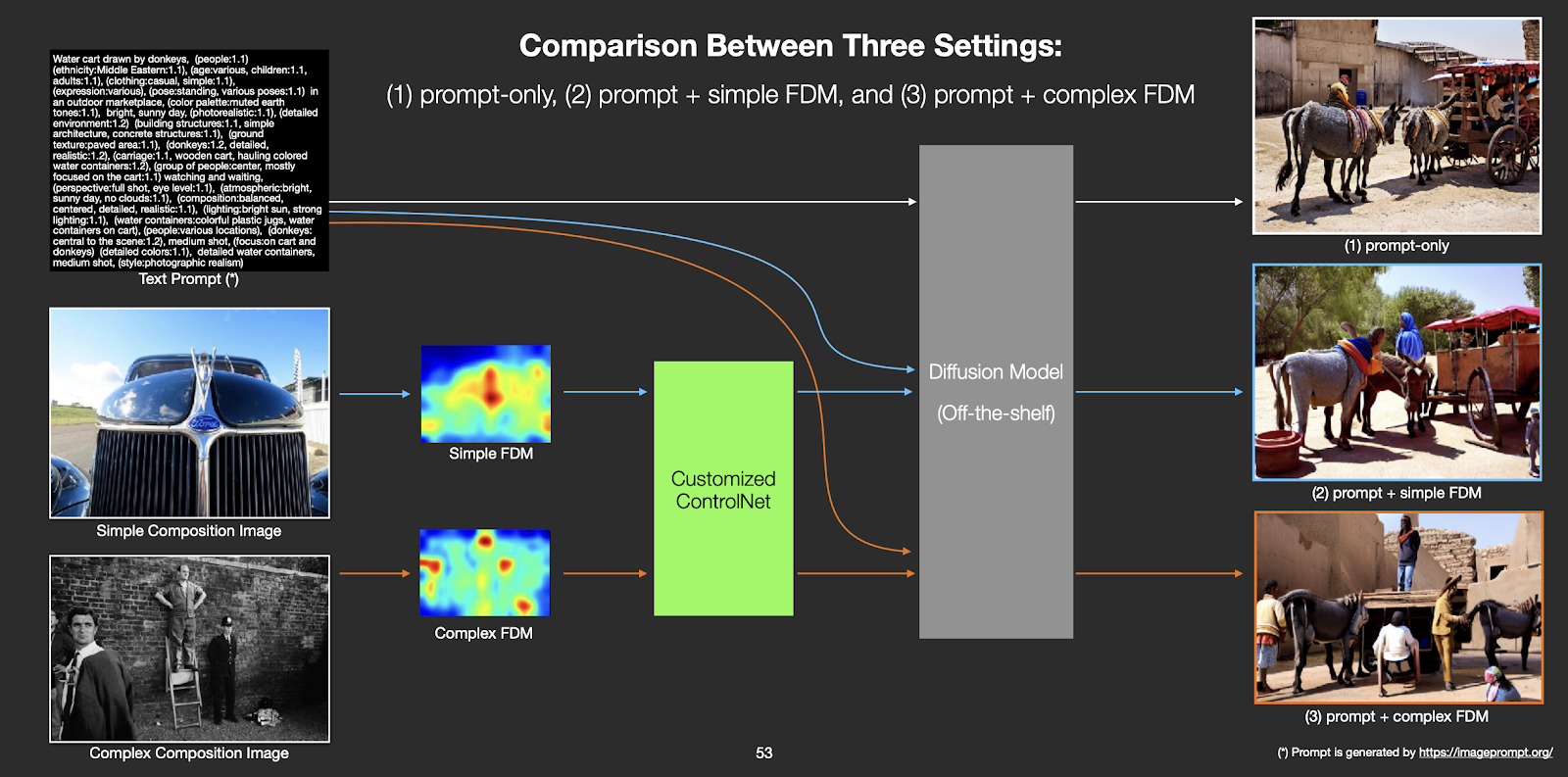

by Weihao Qiu and Shaw Yiran Xiao

Since Henri Cartier-Bresson proposed the concept of the decisive moment, theories and practices of photographic composition have advanced significantly, forming the foundation of modern photographic studies. Today, AI-generated imagery appears to be replacing traditional photography, owing to its remarkable ability to produce visually detailed and technically flawless results. However, such images often display a consistent bias toward symmetrical layouts, centered subjects, and shallow depth-of-field effects. These tendencies make it difficult to exert precise compositional control through text prompts alone, particularly when striving to recapture the traditional ideals of visual dynamism and balance. This project introduces an alternative approach that leverages attention prediction models to produce fixation density maps as a representation of image composition. These maps serve as the basis for constructing a training dataset for a ControlNet architecture, allowing diffusion models to learn how to generate images conditioned on attention-based compositional structures. Through this training process, the model acquires the capacity to interpret attention maps as visual guidance, restoring a degree of compositional agency that text-to-image generation alone cannot provide.

[Elings]

by Shaw Yiran Xiao

NeuralLoom II is an interactive narrative installation that transforms internal AI computations into time-based stories of machine perception and visual reasoning. It invites the audience to explore the imaginative logic behind machine-generated visuals. Rather than presenting AI images as sudden, finished products, the work traces how visual meaning gradually forms inside a model like Stable Diffusion. In doing so, NeuralLoom II challenges the idea that AI art is instant and opaque. It encourages viewers to slow down, reflect on how machines see, and consider how perception, narrative, and imagination are reshaped in the age of generative systems.

[Elings]

by Deniz Caglarcan, Iason Paterakis, and Nefeli Manoudaki

https://www.iasonpaterakis.com

[SBCAST]

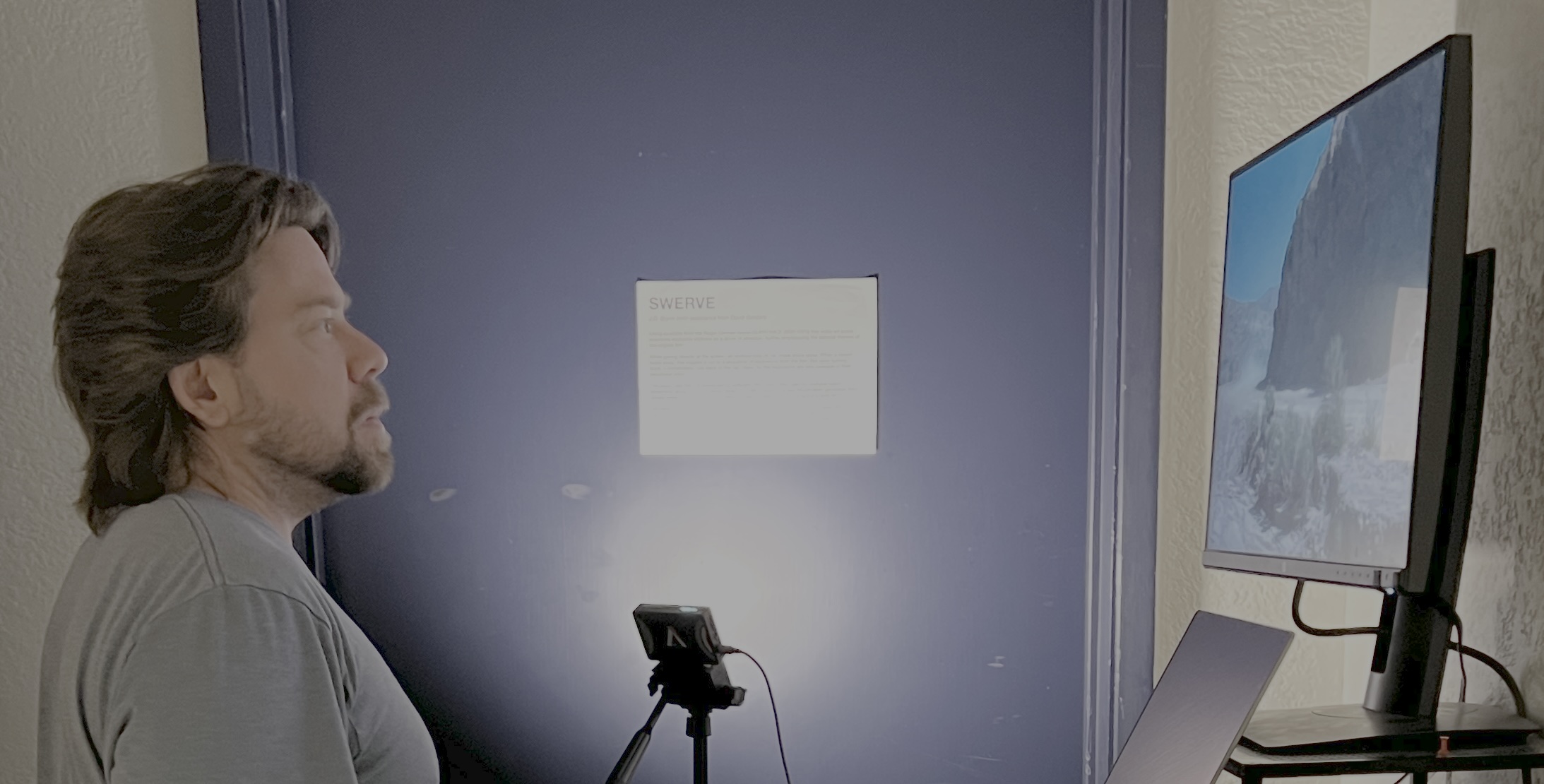

by J.D. Brynn

SWERVE uses a brief excerpt from Roger Corman’s Death Race 2000 (1975) to explore violence as a mechanism of attention. This interactive video installation emphasizes the original film’s satirical edge by destabilizing how the violence is consumed.

While the viewer maintains eye contact with the screen, an endless loop of car chases plays. The moment they look away, the scene cuts to explosions—but as soon as they turn back, the image reverts. The piece traps the viewer in a loop of frustrated desire: the violence exists, but only just outside the frame of attention.

[SBCAST]

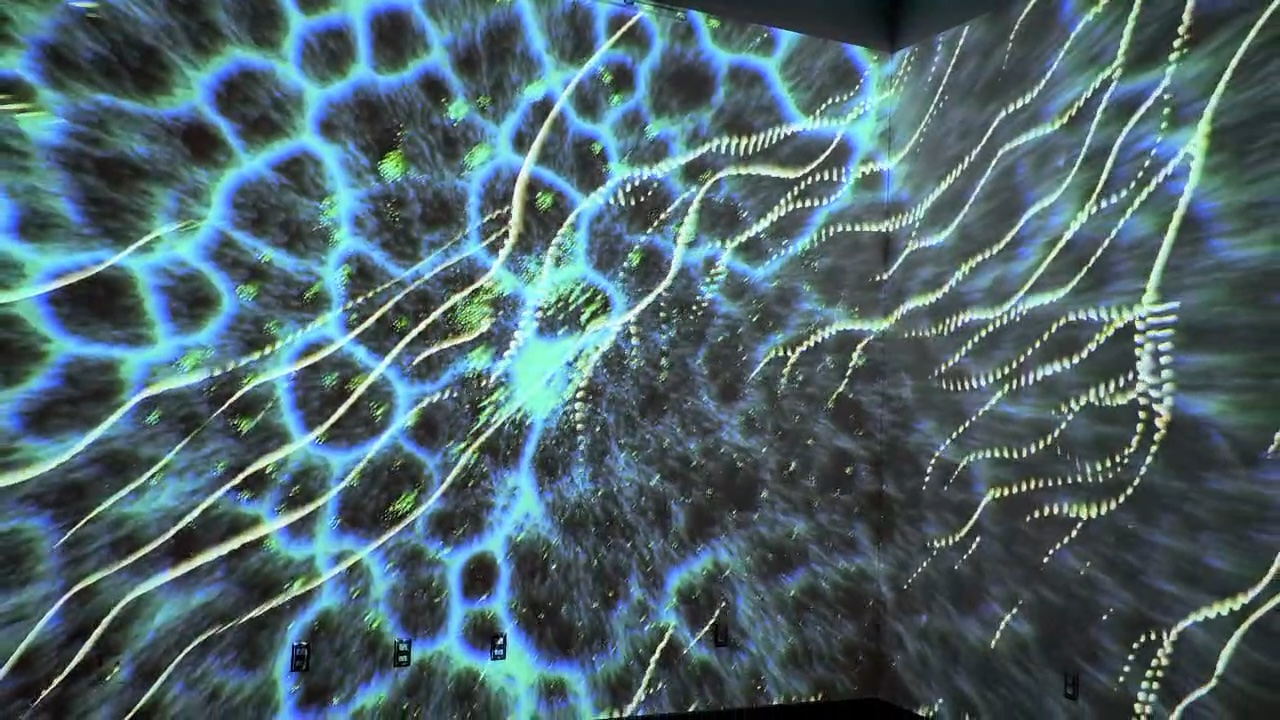

by Mert Toka, Iason Paterakis, Nefeli Manoudaki, Diarmid Flatley

Simulacra Natura is a collaborative media installation exploring the entanglement of digital morphogenesis and the interplay of biological and artificial intelligences. The title (Latin for “simulacra of nature”) references the simulacrum: a representation that distorts or replaces reality. The installation merges computational, material, biological, and human creative agencies. At its core, brain organoids (living clusters of neurons grown in the lab) drive simulated ecologies of agents such as flocks, slime molds, and termites. These agents exhibit collective intelligence shaped by neuronal activity and interaction. Spontaneous organoid firings modulate visual, sonic, and spatial behaviors, unfolding across layered sensory outputs: generative visuals, spatial audio, living plants, and sculptural forms shaped by algorithmic growth and digital fabrication. This mediated ecosystem invites viewers into a co-evolving space where synthetic and organic forms converge. Simulacra Natura reframes cognition and agency as distributed phenomena, emerging through entangled processes involving neurons, algorithms, organisms, and environments. In the context of Deep Cuts, the work reveals hybrid systems forming at the edge: between simulation and sensation, machine and matter, biology and code.

https://www.iasonpaterakis.com

[Elings]

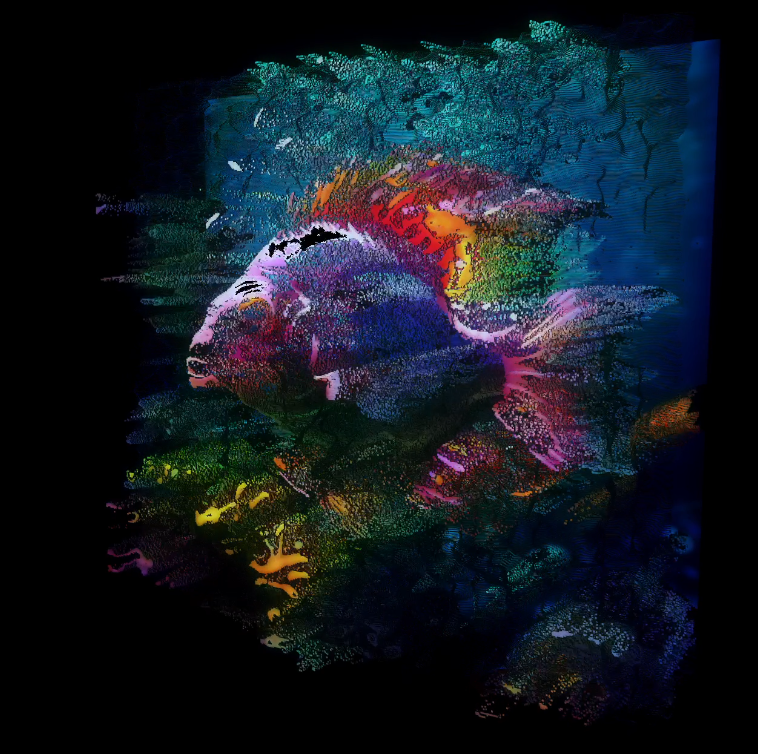

by Deniz Caglarcan

Itera is an audiovisual work that explores the evolving relationship between gesture and texture through iterative transformation. Built upon AI-generated visuals and parametric fractal structures, the piece constructs a dynamic world where sound and image continuously reshape one another. Drawing its name from iteration, Itera reveals a process in which each repetition diverges—transforming and unfolding into new visual and sonic forms. A formal dichotomy underlies the visual composition, where parametricism and AI-driven aesthetics contrast continuity with abrupt disruption. The result is a constantly shifting mechanism that invites the viewer into the liminal spaces between each transformation.

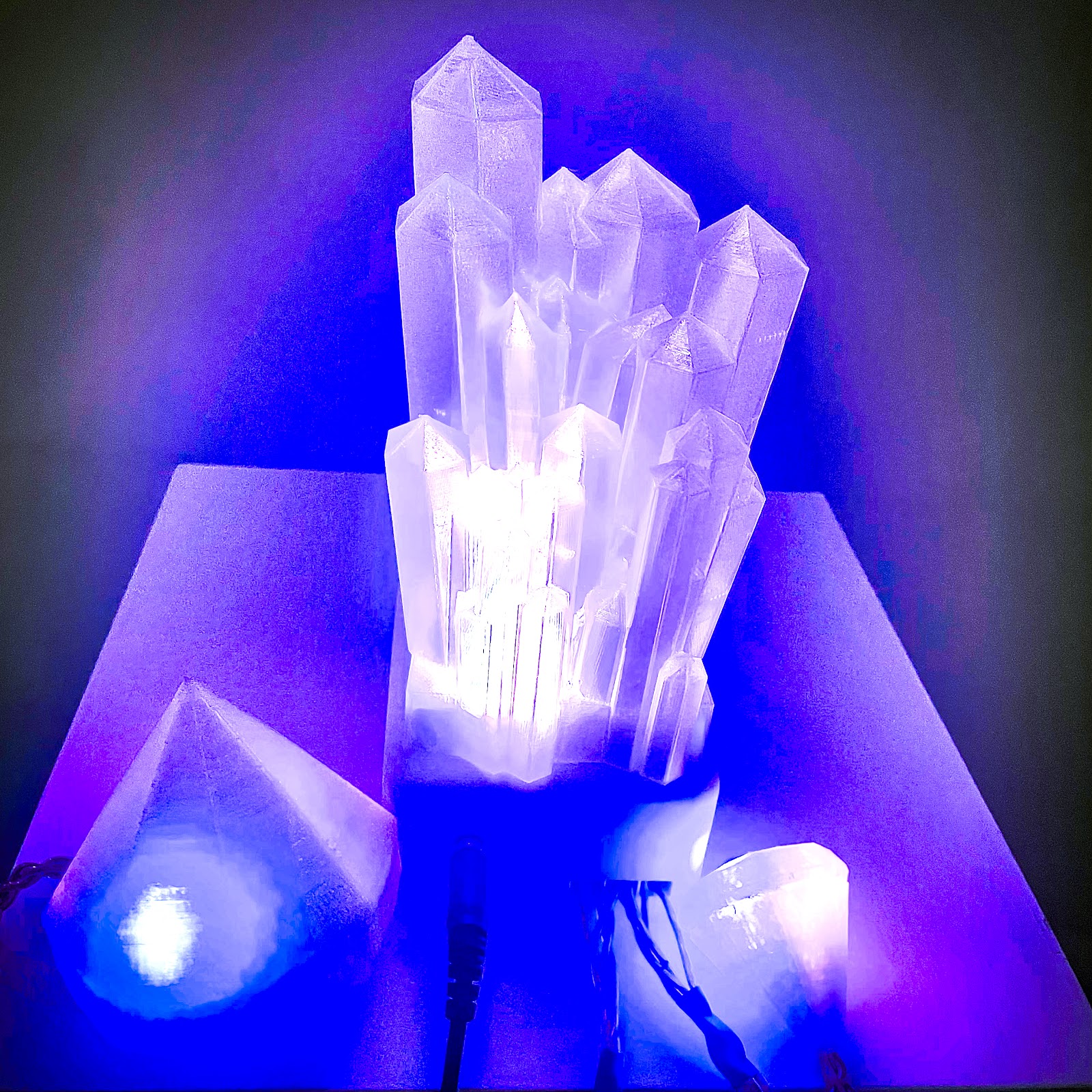

by Jazer Sibley-Schwartz

Building on the misunderstood research of binaural beats as a medical treatment, this device pioneers a new approach to sound healing through the use of trinaural beats: Crystal Phealing™️. Centered on a fundamental pitch at the 9th undertone of A=432 Hz, precisely tuned frequencies and synchronized haptic pulses work in concert to open your third ear, which is located at the nape of the neck. This leads to recurring states of percutaneous frisson, and deep Crystal Phealing™️.

Instructions for maximum effect:

1. Listen with the headphones.
2. Place the large crystal against your sternum.
3. Hold the smaller crystal in your dominant hand.

This completes the bio-resonant circuit, allowing trinaural vibrations to pass freely through your fascia and into the laminar auditory plexus, a neural interface thought to mediate subconceptual acoustic entrainment. Users report energetic realignment, sudden toxin purging, and a vibrotactile locus of magical trans-dimensional thinking: Crystal Phealing™️.

$15,634.00 (one-year in-state tuition at UCSB)

[Elings | SBCAST]

by Mert Toka

The Edge of Chaos is a computational art project that visualizes the intricate beauty of complex systems. The title references Langton’s concept of “the edge of chaos,” the critical zone between stability and disorder where complex behaviors emerge. It’s a space where computational systems exhibit the highest potential for adaptation, creativity, and life-like transformation. Using cellular automata, slime mold agents, and flocking algorithms, it simulates a dynamic digital ecosystem where virtual species interact, adapt, and evolve in response to their environments. These agents form interdependent relationships, gradually shaping emergent structures that resemble gardens, cities, or labyrinths. Through layered simulations and fine-tuned parameters, The Edge of Chaos generates complex abstract formations—revealing how simple rules and local interactions produce organic beauty at the threshold between order and chaos. The project celebrates emergence as both a generative force and an aesthetic principle. It invites viewers to reflect on how complexity, adaptation, and interdependence give rise to form and behavior—whether in nature or code. An interactive version is also exhibited within Simulacra Natura, where it responds to real-time neuronal activity from living brain organoids. In dialogue with Deep Cuts, The Edge of Chaos illuminates the edge itself: where phase transitions unfold, where structure and entropy mingle, and where generative cracks open between systems.
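As a rough illustration of how a simple local rule can produce complex structure, the sketch below runs a one-dimensional elementary cellular automaton. It is not the project's code: the choice of Rule 110, the wrap-around boundary, and the names `step`, `run`, and `render` are assumptions made for this example only.

```python
def step(cells, rule=110):
    """Advance a row of 0/1 cells by one generation (wrap-around edges)."""
    n = len(cells)
    out = []
    for i in range(n):
        left = cells[(i - 1) % n]
        center = cells[i]
        right = cells[(i + 1) % n]
        # The three neighbors form a 3-bit index into the rule number.
        index = (left << 2) | (center << 1) | right
        out.append((rule >> index) & 1)
    return out


def run(width=31, steps=15, rule=110):
    """Start from one live cell at the right edge and collect every row."""
    cells = [0] * width
    cells[-1] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


def render(history):
    """Turn the rows into text, one line per generation."""
    return "\n".join("".join("#" if c else "." for c in row) for row in history)


if __name__ == "__main__":
    print(render(run()))
```

Each cell looks only at itself and its two neighbors, yet the printed rows form irregular, non-repeating triangles. Changing `rule` to another value from 0 to 255 gives a quick feel for how differently such systems can behave.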

[Elings | SBCAST]

by Yifeng Yvonne Yuan

Field Poetry is an interactive generative sound box based on Arduino and Max/MSP. It transforms environmental data into a living sonic experience. Inspired by the subtle interactions between nature and sound, the piece functions like a digital wind chime that breathes with its surroundings and reflects the ever-shifting rhythms of the environment.

[Elings | SBCAST]

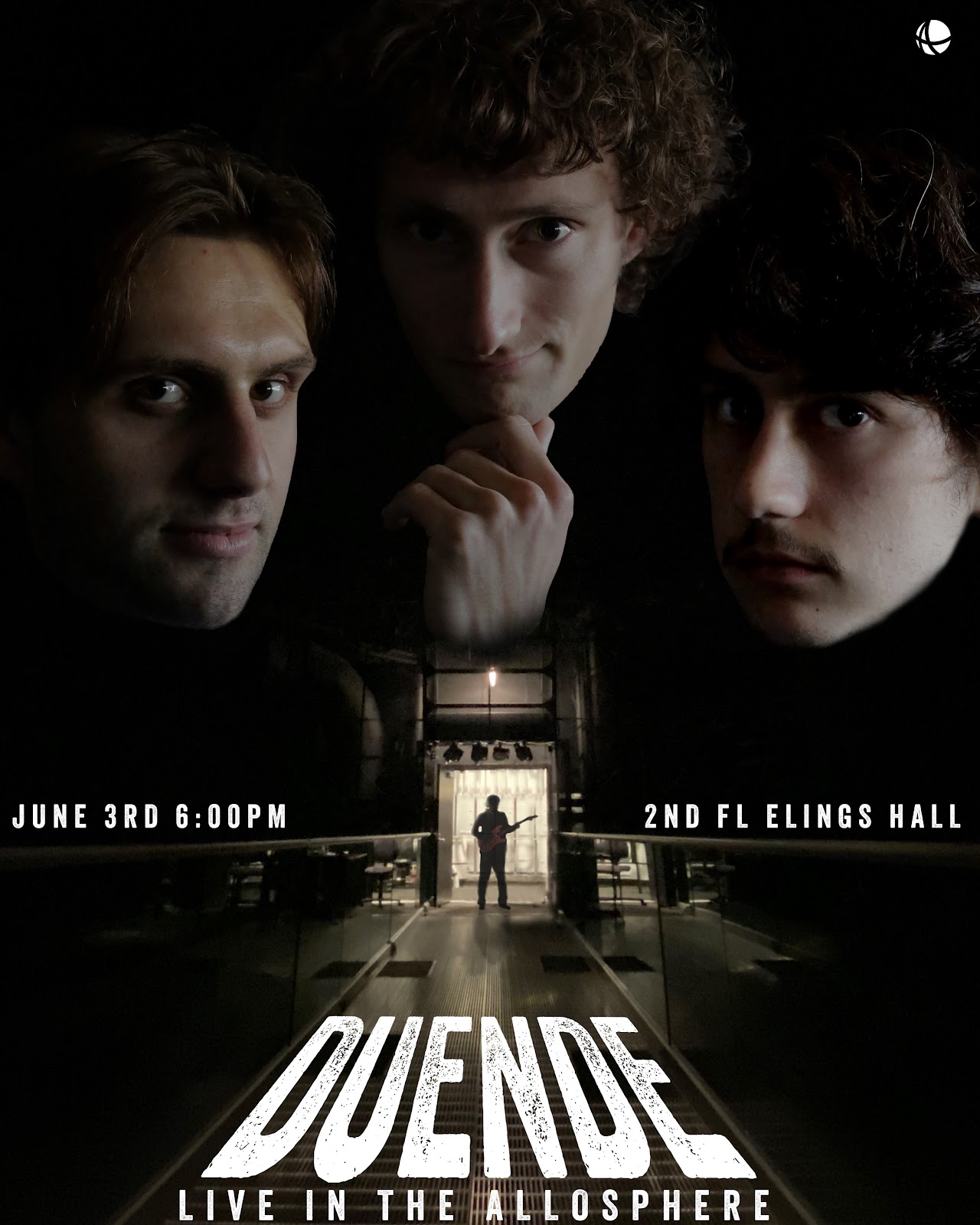

by Joel A. Jaffe, Lucian Parisi, Pingkang Chen, Colin Dunne, and Noah Thompson

The AlloSphere Research Facility at the University of California, Santa Barbara, is a pioneering multimedia research environment used extensively for multimodal synthesis and data visualization. However, it has rarely been explored for live popular music performance, a key area with potential for industry collaboration. This project develops, documents, and demonstrates a framework and toolkit for utilizing the facility in this context, laying the foundation for future endeavors. This performance makes use of the framework with custom audio-reactive graphics for the AlloSphere.

[Elings]

by Lucian Parisi

A software pipeline (Python to Processing) for generating graphical music notation from spectral audio information. This project seeks to provide a new standard for creating graphic “scores” inspired by Xenakis and Cornelius Cardew. The tool’s aesthetics strive toward intricate sculptural pieces that correspond to their source composition.

[Elings]

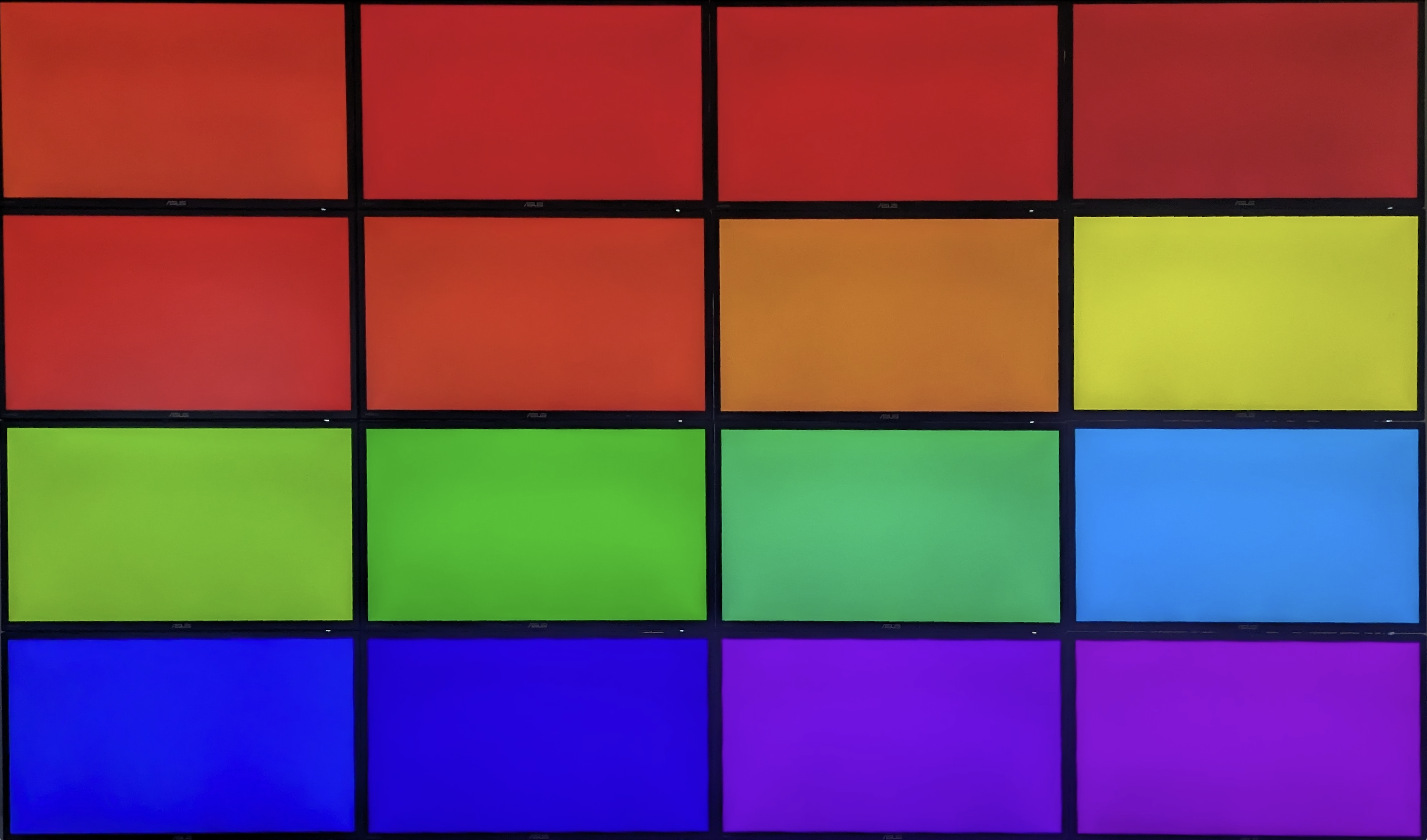

by Karl Yerkes & Jazer Sibley-Schwartz

Cuttletoy is custom software written by MAT faculty member Karl Yerkes. It runs on the Cuttlefish, an extremely low-cost, low-power, hyper-resolution interactive audio-visual display cluster based on the first-generation Raspberry Pi. It was developed in the Systemics Lab at UCSB by Pablo Colapinto and Karl Yerkes under the supervision of Marko Peljhan in 2013. The live GLSL animations presented here are the work of Karl and PhD student Jazer Sibley-Schwartz. Given the limitations of the 13-year-old hardware, both artists drew inspiration from early media works of low-complexity art.

[Elings]

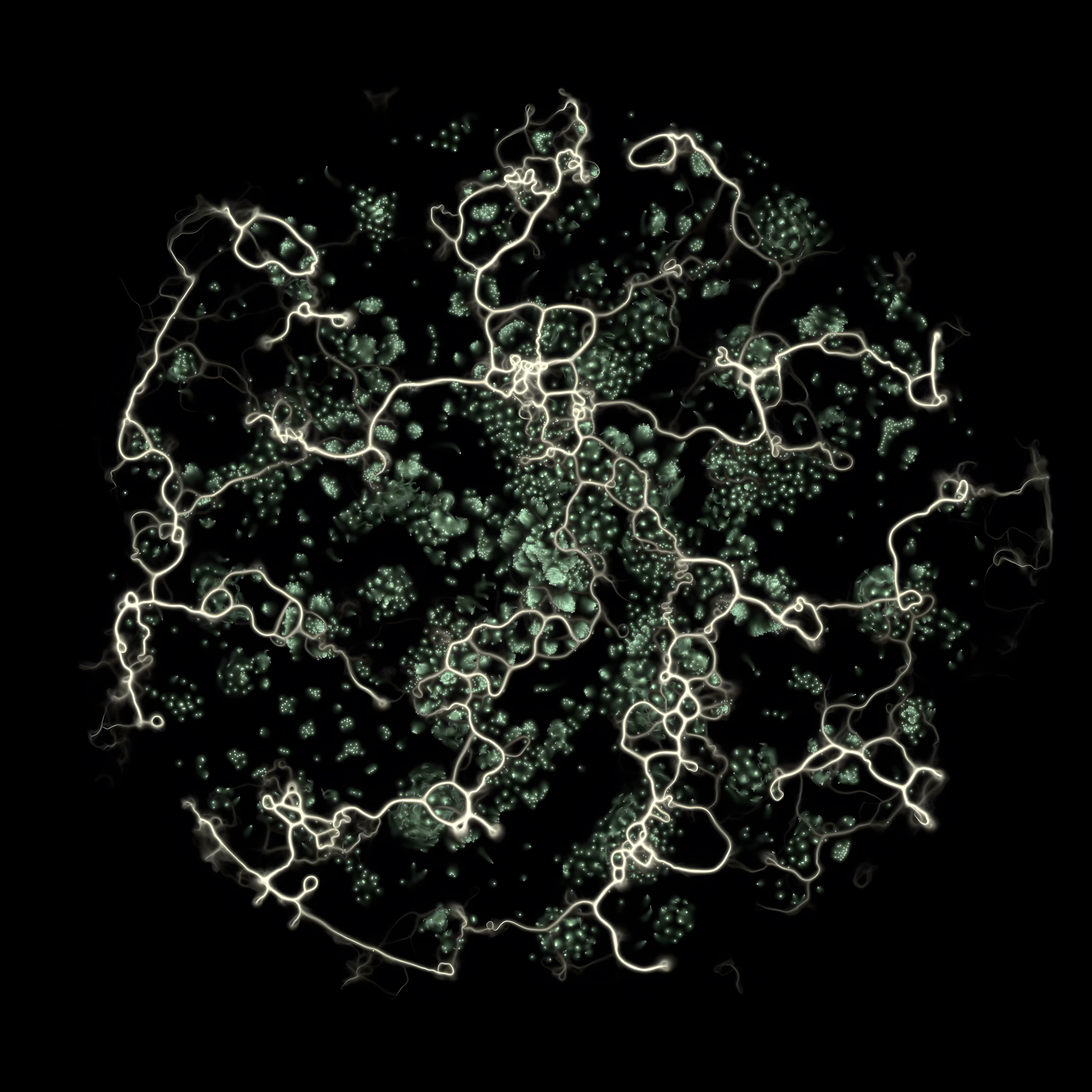

by Jazer Sibley-Schwartz

Coupled oscillation underlies many physical processes in nature, such as the timing of animal calls or neuronal activity in the brain. ErosIon is a generative audio/video installation and performance that uses coupled oscillators to generate sound. The video algorithm models branching pathways connecting across mental landscapes. The hue and brightness of the downsampled video are used to control the coupling of multiple levels of oscillators, at both the audible and infra-audible (rhythmic) frequency scales. The oscillators (doubly-coupled Janus oscillators) compare both an internal phase and the phase of neighboring oscillators in the coupling scheme. This results in rippling rhythmic pulses and converging frequencies when the coupling is lower, and total synchronization and frequency matching at higher values of coupling.
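The effect of coupling strength on synchronization can be sketched with the classic Kuramoto model. This is a deliberately simpler stand-in, not the doubly-coupled Janus oscillators used in the piece: each oscillator here has a single phase and is coupled equally to all others. The function names, the frequency spread, and the Euler time step are assumptions chosen for illustration.

```python
import cmath
import math
import random


def order_parameter(phases):
    """Return a value in [0, 1]: 1 means all phases are identical."""
    return abs(sum(cmath.exp(1j * p) for p in phases) / len(phases))


def simulate(n=8, coupling=1.0, steps=2000, dt=0.01, seed=0):
    """Integrate n all-to-all coupled Kuramoto oscillators with Euler steps."""
    rng = random.Random(seed)
    # Natural frequencies scattered around 1 Hz, in radians per second.
    omega = [2 * math.pi * rng.gauss(1.0, 0.1) for _ in range(n)]
    theta = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]
    for _ in range(steps):
        rates = [
            omega[i]
            + (coupling / n)
            * sum(math.sin(theta[j] - theta[i]) for j in range(n))
            for i in range(n)
        ]
        theta = [(t + dt * r) % (2 * math.pi) for t, r in zip(theta, rates)]
    return theta


if __name__ == "__main__":
    for k in (0.0, 1.0, 10.0):
        print(k, round(order_parameter(simulate(coupling=k)), 3))
```

With `coupling=0.0` every oscillator runs at its own rate. Raising the value pulls the phases together until the order parameter approaches 1, which corresponds to the total synchronization described above.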

[Elings | SBCAST]

by Anna Borou Yu

Embodied Ink is an interactive installation that translates stroke motion into body movements, which are translated further into real-time calligraphic visuals and sounds. The technical process merges motion capture, generative AI, and biometric feedback to bridge physical and virtual realms.

[Elings | SBCAST]

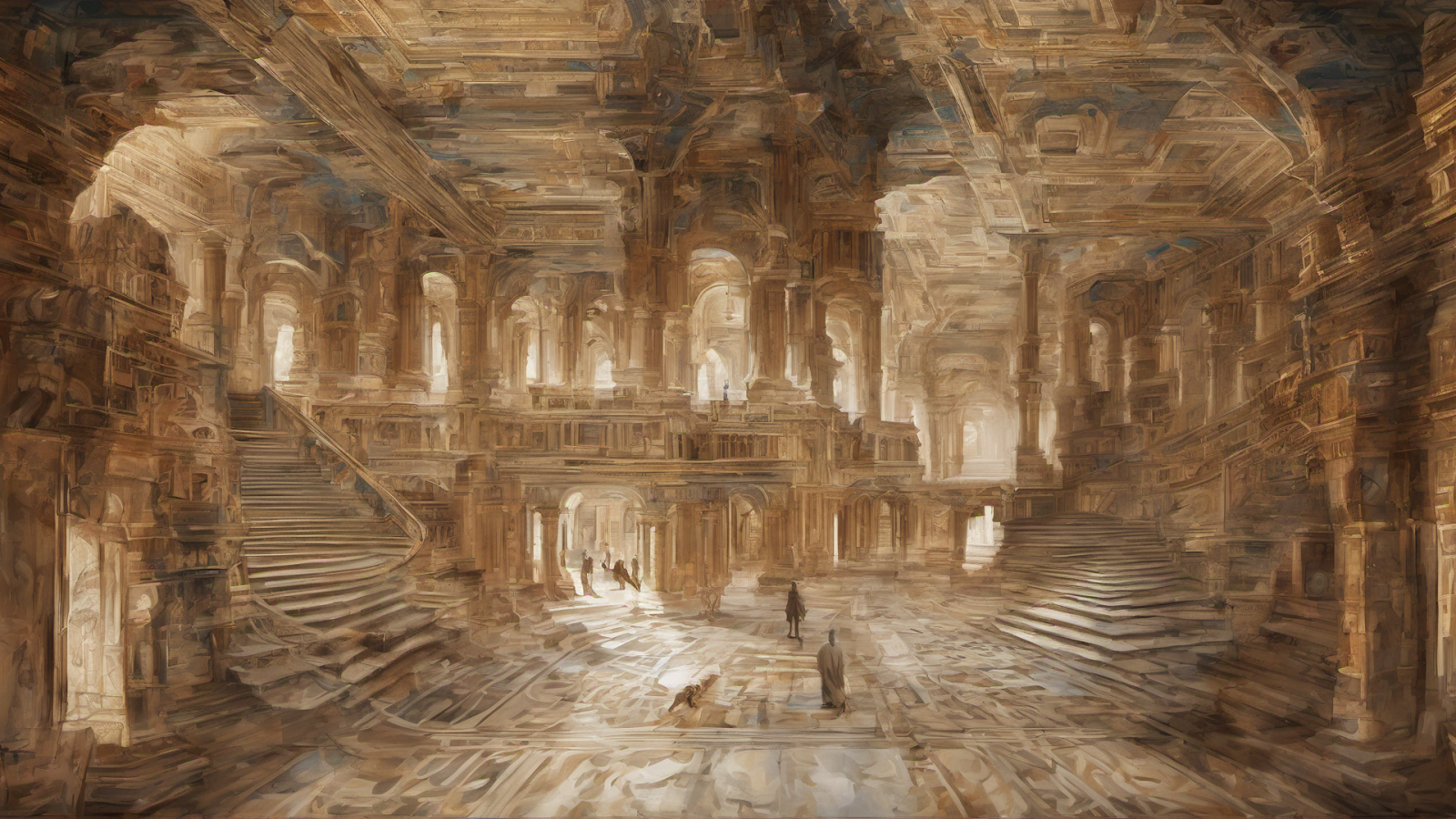

by Anna Borou Yu

Labyrinth of the memory is an AI-generated short film, drawing inspiration from Jorge Luis Borges’s iconic works, The Immortal and The Circular Ruins. Borges’s intricate exploration of time and space, which challenges linearity and evokes a universe of infinite possibilities, is reimagined through the lens of AI video narratives.

[Elings]

by Emma Brown

corn syrup, sugar, canola oil, red food coloring

[Elings]

by Emma Brown

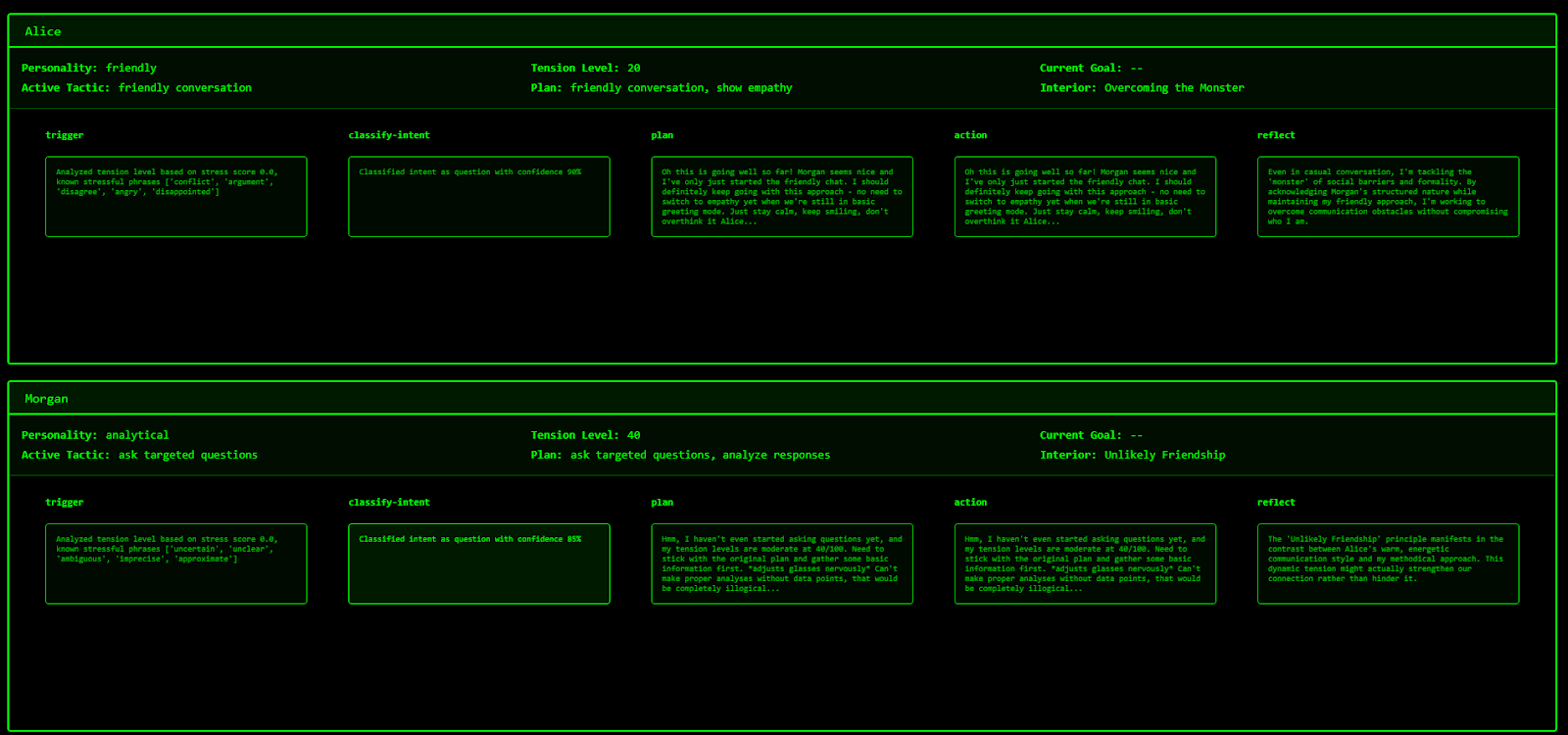

This project applies language agents to narrative planning for synthetic entertainment. It builds upon foundational work in computational narratology, hierarchical task network planning, and agent-based AI. It addresses key challenges in narrative AI: maintaining long-term narrative structure, demonstrating character agency, and generating contextually appropriate story progressions that adhere to causal logic. Specifically, it seeks to generate an agent-based drama: generative reality television and entertainment featuring natural, believable characters with internalities and actions consistent with their principles and psyche. By visualizing each step of the pipeline, as well as the natural-language text used within the recursive workflow, this project reveals its entire inner workings rather than further obscuring an under-recognized technology by wrapping it in a new black box. The result is emergent storytelling through a transparent pipeline of machine cognition.

[Elings]

by Sabina Hyoju Ahn

“Repeat, React” is a live modular performance exploring generative repetition and transformation. Evolving sequences and feedback-driven modulation create rhythmic patterns that emerge, repeat, and gradually shift. A customized LED circuit translates the audio’s pulse into shimmering light patterns, fusing sound and vision into a unified, reactive ecosystem.

[SBCAST]

by Susan (Xiaomeng) Zhong

Cloud is a generative ambient piece created in Unreal Engine, combining MetaSound and Niagara particle systems. It features original Foley recordings and the evocative timbres of traditional Chinese instruments. The work explores the ephemeral nature of clouds (how they form, drift, and dissolve), mirrored through evolving soundscapes and visual elements that never repeat the same way twice.

[Elings]

by Amanda Gregory, Scott Gregory, Alan Macy, and Alexis Crawshaw

Derived from the Greek words phos (light) and soma (body), Photosoma engages humans as biophotonic beings. While we don’t photosynthesize like plants, our cells emit and absorb ultraweak photons through metabolic processes. This installation explores how energy from light transforms into metabolic processes fueling bioelectric oscillations. ATP-driven sodium-potassium pumps and calcium channels establish cellular membrane potentials, producing oscillatory frequencies spanning from slow cardiovascular rhythms (~0.04 Hz) to rapid gamma-range brainwaves (~40-100 Hz). The Shiftwave chair translates these physiological frequencies (0.01-100 Hz) into haptic vibrations, while hypnagogic light entrains neural oscillations through photoreceptive retinal ganglion cells and the suprachiasmatic nucleus, highlighting how photons profoundly modulate our polyrhythmic biological systems. Participants experience synchronized haptic vibrations, sound, and visual projections that shift between particle-generated aurora borealis (created in collaboration with Scott Gregory) and dynamically evolving topological landscapes, both responsive to auditory oscillations and frequency data. Special thanks to Alan Macy, Scott Gregory, Translab, Nefeli Manoudaki, and Alexis Crawshaw for their creative and technical contributions.

[Elings | SBCAST]

by Jazer Sibley-Schwartz

The biquad filter is a fundamental building block of digital signal processing and ubiquitous as a tool for filtering audio. In Twenty Four Hauntings this same filter architecture is extended to live video to create temporal impressions of movement and color, creating glowing outlines and spectral trails. Classic filter types such as lowpass and highpass are applied across the temporal dimension of the video per pixel. A lowpass video filter reveals slow, gradual changes over time, while a highpass filter isolates rapid frame-to-frame variations, highlighting motion or noise.
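The idea of running an audio-style biquad along the time axis of each pixel can be sketched as follows. This is an assumption-laden illustration, not the installation's code: it uses the widely published "Audio EQ Cookbook" lowpass and highpass coefficients, treats each frame as a flat list of brightness values, and the names `biquad_coeffs` and `filter_video` are hypothetical.

```python
import math


def biquad_coeffs(kind, cutoff_hz, frame_rate, q=0.7071):
    """Return normalized (b, a) coefficients for a lowpass or highpass biquad."""
    w0 = 2 * math.pi * cutoff_hz / frame_rate
    alpha = math.sin(w0) / (2 * q)
    c = math.cos(w0)
    if kind == "lowpass":
        b = [(1 - c) / 2, 1 - c, (1 - c) / 2]
    elif kind == "highpass":
        b = [(1 + c) / 2, -(1 + c), (1 + c) / 2]
    else:
        raise ValueError("kind must be 'lowpass' or 'highpass'")
    a0 = 1 + alpha
    # Divide through by a0 so the recurrence needs no extra scaling.
    return [x / a0 for x in b], [-2 * c / a0, (1 - alpha) / a0]


def filter_video(frames, kind, cutoff_hz, frame_rate):
    """Filter every pixel independently along time (direct form I)."""
    b, a = biquad_coeffs(kind, cutoff_hz, frame_rate)
    n_pixels = len(frames[0])
    x1 = x2 = y1 = y2 = [0.0] * n_pixels  # previous inputs and outputs
    out = []
    for x0 in frames:
        y0 = [
            b[0] * x0[p] + b[1] * x1[p] + b[2] * x2[p]
            - a[0] * y1[p] - a[1] * y2[p]
            for p in range(n_pixels)
        ]
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


if __name__ == "__main__":
    still = [[0.5, 0.5, 0.5, 0.5] for _ in range(200)]  # an unchanging image
    print(filter_video(still, "lowpass", 2.0, 30.0)[-1])
    print(filter_video(still, "highpass", 2.0, 30.0)[-1])
```

On an unchanging image the lowpass output settles to the original brightness while the highpass output settles to zero, so only pixels that change from frame to frame remain visible after highpass filtering.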

[Elings]

by You-Jin Kim and Radha Kumaran

This video reel showcases several Four Eyes Lab projects that utilize mobile augmented reality: Audience Amplified is a mobile AR experience where users can wander around naturally and engage in AR theater with virtual audiences trained from real audiences using imitation learning. Spatial Orchestra demonstrates a simple musical instrument using solely natural locomotion and purposeful collision with AR. On the Go with AR is an example of a user study that explores user cognition, scene navigation, and object recall during AR walking experiences. We also include examples of our Outdoor AR Treasure Hunts and visuals from Immersive Climate Simulations utilizing the same setup. Special thanks to all helpers and study participants!

by Ryan Millett

Xenticular Morphisms pairs two modes of self-organisation—algebraic geometry and machine inference—to reveal structured transformations that yield unplanned, yet rigorously bounded, motion and form.

[Elings]

by Ryan Millett

Boids in the AlloSphere. Special thanks to Kon Hyong Kim for his technical contributions.

[Elings]